Faxing Pipelines

11th September 2008

An interesting article here. Arizona Mulls New Water Source: Ocean

According to the article:

The water for Arizona’s future needs may lie off the coast of a popular Mexican resort, in the Gulf of California.

State officials are studying the idea of importing filtered ocean water from an as yet unbuilt desalination plant in Puerto Peñasco, 60 miles south of the U.S. border.

Such a project would raise a host of political, economic and environmental issues, and it’s not clear who would pay the construction costs, which could top $250 billion.

Did you read that: 250 billion. That’s with a B. I figure that has to be a typo. But I don’t know.

The New York Times discusses Alaska Governor Palin’s gas pipeline from the North Slope. The cost is $40 billion for a 1,700-mile pipeline. It’s a long way from being built.
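For a rough sense of scale, here is a minimal sketch that turns the quoted gas pipeline figures into a cost per mile, and then (purely as an assumption for illustration) applies that rate to a 1,000-mile water line. The function name and the 1,000-mile case are mine, not from either article.

```python
# Figures quoted above for the North Slope gas pipeline.
GAS_PIPELINE_COST_USD = 40e9
GAS_PIPELINE_MILES = 1700


def cost_per_mile(total_cost_usd: float, miles: float) -> float:
    """Average construction cost per mile of pipeline."""
    return total_cost_usd / miles


gas_per_mile = cost_per_mile(GAS_PIPELINE_COST_USD, GAS_PIPELINE_MILES)

# Hypothetical: a 1,000-mile water pipeline built at the same per-mile rate.
water_line_at_gas_rate = gas_per_mile * 1000

print(f"Gas pipeline: about ${gas_per_mile / 1e6:.1f} million per mile")
print(f"1,000 miles at that rate: about ${water_line_at_gas_rate / 1e9:.1f} billion")
```

That per-mile number is the one a water pipeline would have to come in well under.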

Gallon for gallon — gas is more valuable than water. So water pipelines need to be cheaper than gas pipelines. How to do that?

Recently I posted a piece about the importance of cheaply researching (by way of computer modeling) a new kind of energy-efficient, easy-to-manufacture, easy-to-repair pipeline for shipping water inland 1,000 miles and more at little extra cost beyond the cost of desalination.

There’s another step to the process. What would happen once you had several different material and design specs for a pipeline in the computer? What then? Well, the way to get costs down for a big project is to do a 3D fax of the pipeline, maybe changing the material and design specs as the pipeline snaked its way up through the inland desert.

This technology is already in fast forward.

USC’s ‘print-a-house’ construction technology

Caterpillar, the world’s largest manufacturer of construction equipment, is starting to support research on the “Contour Crafting” automated construction system that its creator believes will one day be able to build full-scale houses in hours.

This technology would easily adapt to the creation of pipelines by way of this extrusion mechanism.

Behrokh Khoshnevis, a professor in the USC Viterbi School of Engineering, says the system is a scale-up of the rapid prototyping machines now widely used in industry to “print out” three-dimensional objects designed with CAD/CAM software, usually by building up successive layers of plastic.

They want to move from plastic to concrete.

“Instead of plastic, Contour Crafting will use concrete,” said Khoshnevis. More specifically, the material is a special concrete formulation provided by USG, the multi-national construction materials company that has been contributing to Khoshnevis’ research for some years as a member of an industry coalition backing the USC Center for Rapid Automated Fabrication Technologies (CRAFT), home of the initiative.

The feasibility of the Contour Crafting process has been established by a recent research effort which has resulted in automated fabrication of six-foot concrete walls.

Consider if they can go from plastic to concrete–it won’t be long before they can do just about any material. Not just any material. Any design as well. They can already extrude walls.

The project has major backing:

Caterpillar will be a major contributor to upcoming work on the project, according to Everett Brandt, an engineer in Caterpillar’s Technology & Solutions Division, who will work with Khoshnevis. Another Caterpillar engineer, Brian Howson, will also participate in the effort.

The goals for the project are really everything needed to develop pipeline extrusion machines.

Goals for this phase of the project are process and material engineering research to relate various process parameters and material characteristics to the performance of the specimens to be produced. Various experimental and analytical methods will be employed in the course of the research.

Future phases of the project are expected to include geometric design issues, research in deployable robotics and material delivery methods, automated plumbing and electrical network installation, and automated inspection and quality control.

Somebody needs to be developing a pipeline script to be ready when these machines are ready to read the instruction set.

Adapting RO Plants for New Membranes

16th August 2008

John Walp, commissioning manager of the Brackish Groundwater National Desalination Research Facility in Alamogordo, talked to Chamber of Commerce Water Committee members Monday [8/11/08] about new technologies.

The article mentions something about the speed and direction of membrane evolution.

Technological development and innovation are also taking place in membranes used to filter water in reverse osmosis. Large diameter membranes have been found to reduce capital costs. Also, pre-filtration technology development is gaining momentum because it extends the life of expensive membranes.

“If you want to keep replacing membranes, then let raw water through,” Walp said. “If you want expensive membranes to last, you have to treat the water first.”

Pump and energy recovery development is taking place to improve efficiency and reduce energy consumption, Walp said.

In short, the cost of reverse osmosis technology is going down with an influx of new innovation, Walp said.

Today the average cost of water processed by reverse osmosis is $2.27 to $3.03 a gallon. Within five years the cost will be closer to $1.89 a gallon and within 20 years, the cost will drop to an average of 50 cents a gallon.

As I’ve mentioned before–I think costs will drop faster. But there will be intermediate steps. These intermediate steps, however, promise to cause quite a headache for planners.

“The California Coastal Commission has cast an historic vote to approve Poseidon Resources’ Carlsbad Desalination Project….The project is on schedule to begin construction the first half of 2009 and delivering drinking water in 2011. ”

Great. That plant may incorporate the latest RO pump developed by Energy Recovery Inc.

But it’s only great luck that the pump comes along at the same time as the plant is in its planning stages.

What happens when an RO technology comes along that goes against the latest engineering model? Notice above that pretreatment is getting more traction as a way to extend the life of membranes?

Likely, Poseidon’s new Carlsbad project will adopt some kind of pretreatment. A popular pretreatment is to chlorinate water before it’s passed through the membrane. The chlorine kills the wee beasties & algae that foul membranes. But then the chlorine is also removed before the water passes through the membrane, because chlorine also tends to degrade membranes faster.

Doesn’t that look like a complicated CAD?

What happens if you get a membrane that requires plant design changes?

Well, as it happens, a new chlorine-tolerant membrane has been developed at the University of Texas at Austin. However, it’s not clear whether this is an improvement on the membrane designed by UCLA’s Eric Hoek, announced two years ago. The chlorine-tolerant membrane would cut step two out of the process. But Eric’s membrane might cut steps one and two out of the process. The chlorine-tolerant membrane is not ready for prime time, nor has any date been set for it to go into commercial production. Eric Hoek’s membrane is further along. It is slated for commercial production in late 2009/early 2010.
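To make the "which steps drop out" point concrete, here is a small sketch of the treatment train as data. It assumes step one is chlorination and step two is dechlorination, as described above. The class, the step names, and the claim that the UCLA membrane skips both steps are illustrative assumptions (the post only says it "might"), not anything from Poseidon's specs.

```python
from dataclasses import dataclass

# Conventional train as described above: chlorinate, remove the chlorine, then RO.
CONVENTIONAL_TRAIN = ["chlorination", "dechlorination", "reverse_osmosis"]


@dataclass(frozen=True)
class Membrane:
    name: str
    steps_not_needed: frozenset  # pretreatment steps this membrane would make unnecessary


def treatment_train(membrane: Membrane) -> list:
    """Return the process steps still required for the given membrane."""
    return [step for step in CONVENTIONAL_TRAIN if step not in membrane.steps_not_needed]


conventional = Membrane("conventional RO", frozenset())
chlorine_tolerant = Membrane("chlorine-tolerant (UT Austin)", frozenset({"dechlorination"}))
# Assumption: treats the "might cut steps one and two" case as if it were confirmed.
ucla = Membrane("UCLA (Hoek)", frozenset({"chlorination", "dechlorination"}))

for m in (conventional, chlorine_tolerant, ucla):
    print(f"{m.name}: {' -> '.join(treatment_train(m))}")
```

A plant spec written this way could swap membranes by changing one entry instead of redrawing the whole train.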

As of now Poseidon can’t CAD either into current engineering specs.

But since the new membranes cut out a step or two of pretreatment, there should be a way to write this into the specs for the plant. Likely Poseidon has the tools for this. I would suggest two. I mentioned both here a couple years back. The first by Autodesk “enables customers to create designs based on the functional requirements of a product before they commit to complex model geometry, allowing designers to put function before form.” The second tool developed by MIT would enable you to cost out retooling the Poseidon plant for a new membrane. According to the article I posted here back in 2006 “The model allows companies and organizations to develop more accurate bid proposals, thereby eliminating excess “cost overrun” padding that is often built into these proposals.”

Of course the sort of plant design changes caused by teams at UT Austin and UCLA will pale in comparison to the LLNL membranes–but that’s for another year.

Computer Modeling of Water in Pipelines

11th July 2008

The environmentalist case for using wind and solar now dovetails with national security concerns for getting off dependence on foreign oil. You can see it in T. Boone Pickens’ ads on TV now. Something similar is afoot with water. Only in this case rising sea levels would seem to force the same change as falling rainfall in desert regions. Read this LA Times article about environmentalist Carl Hodges making the environmental case for shipping seawater inland to neutralize sea-level rise (as well as to raise food and fuel).

Hodges only wants to ship seawater a little way inland. I don’t think he quite understands the technological revolution underway currently.

In May 2007 I posted that IBM Predicts Big Changes in Water Production & Distribution in 5 Years

imho in order to have a successful 21st-century water policy, desalinated water from the ocean will need to be piped to deserts 1,000 miles inland on a vast scale. For this to be done economically, a way needs to be devised to cheaply create, in bulk, very low-maintenance pipes that push water uphill over long distances with little or no added energy cost. In order to cheaply invent these pipes, a computer modeling effort will have to be undertaken.

There are three variables that I can think of right off that might be modeled to push water uphill passively: some variation of hydrophobic vs. hydrophilic material inside the pipe; some variation of heat and cold conduction from the outside to the inside of the pipe; and some variation of shape inside the pipe. Nor is it clear that a pipe needs to be completely hollow. A redwood tree pushes immense amounts of water straight up daily. In fact, according to this physorg article: tree branching key to efficient flow in nature and novel materials. Finally, some allowance for solar energy to be used for pumping can be made for early models as the cost of solar power falls under the cost of coal in the next few years.
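For a baseline that any passive design would have to beat, here is a minimal sketch of the conventional pumping energy for a long uphill pipeline. It uses the standard Darcy-Weisbach head loss with the Swamee-Jain friction factor approximation for turbulent flow. The pipe diameter, velocity, lift, wall roughness and pump efficiency are all assumed values for illustration, not figures from any of the articles.

```python
import math

G = 9.81        # gravitational acceleration, m/s^2
RHO = 1000.0    # density of fresh water, kg/m^3
NU = 1.0e-6     # kinematic viscosity of water near 20 C, m^2/s


def friction_factor(reynolds: float, rel_roughness: float) -> float:
    """Swamee-Jain approximation to the Colebrook equation (turbulent flow)."""
    return 0.25 / math.log10(rel_roughness / 3.7 + 5.74 / reynolds ** 0.9) ** 2


def pumping_energy_kwh_per_m3(length_m, diameter_m, velocity_m_s, lift_m,
                              roughness_m=4.5e-5, pump_efficiency=0.8):
    """Energy to move 1 m^3 of water through the pipe, in kWh.

    roughness_m and pump_efficiency are assumed defaults (roughly commercial
    steel and a typical large pump), not measured values.
    """
    reynolds = velocity_m_s * diameter_m / NU
    f = friction_factor(reynolds, roughness_m / diameter_m)
    # Darcy-Weisbach friction head, in meters of water column.
    friction_head = f * (length_m / diameter_m) * velocity_m_s ** 2 / (2 * G)
    total_head = lift_m + friction_head
    joules_per_m3 = RHO * G * total_head / pump_efficiency
    return joules_per_m3 / 3.6e6


# Hypothetical case: 1,000 miles, 2 m pipe, 1.5 m/s, 1,000 m of net climb.
MILE_M = 1609.344
energy = pumping_energy_kwh_per_m3(1000 * MILE_M, 2.0, 1.5, 1000.0)
print(f"Pumping energy: {energy:.2f} kWh per cubic meter")
```

The two terms in `total_head` map onto the variables above: surface material and inner shape go after the friction term, while only something like the redwood trick goes after the lift term.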

What would be the algorithms to use in the computer models? First of all, I think that materials simulations are already well understood. What may not be so well understood is the flow of water across complex materials & surfaces and the interaction of that in a pipe. So the idea is to find algorithms that enable researchers to test new materials, either singly or in combination with others, and with different shapes, as they interact with water in a pipe. What algorithms? NIST is going to come out with a new library of mathematical references.

NIST releases preview of much-anticipated online mathematics reference

That’s a whole library of equations on which to base algorithms.

My suggestion would be three formulas. These are not algorithms. But they could be incorporated into algorithms. One formula models the flow of water over complex shapes and variable materials. Another formula models water in a pipe. A third models how fluids separate from a surface under certain conditions. See below.

140-year-old math problem solved by researcher

Academic makes key additions to the Schwarz-Christoffel formula

A problem which has defeated mathematicians for almost 140 years has been solved by a researcher at Imperial College London.

Professor Darren Crowdy, Chair in Applied Mathematics, has made the breakthrough in an area of mathematics known as conformal mapping, a key theoretical tool used by mathematicians, engineers and scientists to translate information from a complicated shape to a simpler circular shape so that it is easier to analyse.

This theoretical tool has a long history and has uses in a large number of fields including modelling airflow patterns over intricate wing shapes in aeronautics. It is also currently being used in neuroscience to visualise the complicated structure of the grey matter in the human brain.

A formula, now known as the Schwarz-Christoffel formula, was developed by two mathematicians in the mid-19th century to enable them to carry out this kind of mapping. However, for 140 years there has been a deficiency in this formula: it only worked for shapes that did not contain any holes or irregularities.

Now Professor Crowdy has made additions to the famous Schwarz-Christoffel formula which mean it can be used for these more complicated shapes. He explains the significance of his work, saying:

“This formula is an essential piece of mathematical kit which is used the world over. Now, with my additions to it, it can be used in far more complex scenarios than before. In industry, for example, this mapping tool was previously inadequate if a piece of metal or other material was not uniform all over – for instance, if it contained parts of a different material, or had holes.”

Professor Crowdy’s work has overcome these obstacles and he says he hopes it will open up many new opportunities for this kind of conformal mapping to be used in diverse applications.

“With my extensions to this formula, you can take account of these differences and map them onto a simple disk shape for analysis in the same way as you can with less complex shapes without any of the holes,” he added.

Professor Crowdy’s improvements to the Schwarz-Christoffel formula were published in the March-June 2007 issue of Mathematical Proceedings of the Cambridge Philosophical Society.
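For reference, the classical Schwarz-Christoffel formula the article starts from can be written as below. This is the textbook form for a polygon without holes, mapping the upper half-plane onto the polygon's interior. It is not Professor Crowdy's extension, which the release does not spell out. The symbols are the usual ones: the x_k are points on the real axis that map to the polygon's vertices, the interior angle at vertex k is α_k π, and A and C are constants that fix position, rotation and scale.

```latex
f(z) = A + C \int^{z} \prod_{k=1}^{n} (\zeta - x_k)^{\alpha_k - 1} \, d\zeta
```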

http://www.rdwaterpower.com/2006/10/06/carbon-nanotube-research/

Navier-Stokes Equation Progress?

Penny Smith, a mathematician at Lehigh University, has posted a paper on the arXiv that purports to solve one of the Clay Foundation Millennium problems, the one about the Navier-Stokes Equation. The paper is here, and Christina Sormani has set up a web-page giving some background and exposition of Smith’s work.

Wikipedia describes Navier-Stokes Equations this way:

They are one of the most useful sets of equations because they describe the physics of a large number of phenomena of academic and economic interest. They are used to model weather, ocean currents, water flow in a pipe, motion of stars inside a galaxy, and flow around an airfoil (wing). They are also used in the design of aircraft and cars, the study of blood flow, the design of power stations, the analysis of the effects of pollution, etc. Coupled with Maxwell’s equations they can be used to model and study magnetohydrodynamics.
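For readers who want to see them, the incompressible Navier-Stokes equations in their standard textbook form are given below, followed by the Hagen-Poiseuille result they reduce to for steady laminar flow in a straight round pipe. Here u is the velocity field, p the pressure, ρ the density, μ the dynamic viscosity, g gravity, Q the volume flow rate, R the pipe radius, L its length and Δp the pressure drop. A real long-distance water main runs turbulent, so the second formula is only the simplest special case.

```latex
\rho\left(\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u}\right)
  = -\nabla p + \mu \nabla^{2}\mathbf{u} + \rho\,\mathbf{g},
\qquad \nabla\cdot\mathbf{u} = 0

Q = \frac{\pi R^{4}\,\Delta p}{8\,\mu\,L}
```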

MIT solves 100-year-old engineering problem

Elizabeth A. Thomson, News Office

September 24, 2008

The green ‘wall’ in this 3D movie shows where a fluid is separating from the surface it is flowing past as predicted by a new MIT theory. MIT scientists and colleagues have reported new mathematical and experimental work for predicting where that aerodynamic separation will occur. Click here to read more

……………………………

When these equations pass peer review they’ll be very helpful in algorithms that model fluids flowing in a pipe.

Heat Transfer Efficiency For Boiling Water Increased 30 Fold

30th June 2008

Jeez, here’s still another amazing innovation. Get this. A couple of researchers at Rensselaer Polytechnic Institute have figured out how to make water boil at a 30 fold increase in the number of bubbles created per unit of energy. That means that energy costs to create steam would drop by 30 fold. This process “could translate into considerable efficiency gains and cost savings if incorporated into a wide range of industrial equipment that relies on boiling to create heat or steam.”

Ya think one of them might be desalination? Hmm, well, there is also the Kanzius effect. An efficient heat transfer process there might make the 3000 degree flame net energy for the process. As well, you might be able to get more steam for less energy to reduce the costs of a Kanzius steam reformation process. Or efficiently boiled water might be injected into gypsum deposits. imho the salt would play hell on the nanorods that coat the copper surfaces mentioned in the article below. But if you could desalt and heat the water before it hit the nanorod copper plates, the steam could be used to drive electrical generation more efficiently to reduce the costs of membrane desalination. Finally, a word about pipelines. Maybe an efficient heat transfer material in combination with hydrophobic materials would enable cheaper ways to push water uphill in a pipe. Anyhow check out the article below. Interesting stuff. There’s a Rensselaer Polytechnic Institute Pr

As well as the write up below in PhysOrg.

Anyhow consider the article below.

New nano technique significantly boosts boiling efficiency

A scanning electron microscope shows copper nanorods deposited on a copper substrate. Air trapped in the forest of nanorods helps to dramatically boost the creation of bubbles and the efficiency of boiling, which in turn could lead to new ways of cooling computer chips as well as cost savings for any number of industrial boiling application. Credit: Rensselaer Polytechnic Institute/ Koratkar

Whoever penned the old adage “a watched pot never boils” surely never tried to heat up water in a pot lined with copper nanorods.

A new study from researchers at Rensselaer Polytechnic Institute shows that by adding an invisible layer of the nanomaterials to the bottom of a metal vessel, an order of magnitude less energy is required to bring water to boil. This increase in efficiency could have a big impact on cooling computer chips, improving heat transfer systems, and reducing costs for industrial boiling applications.

“Like so many other nanotechnology and nanomaterials breakthroughs, our discovery was completely unexpected,” said Nikhil A. Koratkar, associate professor in the Department of Mechanical, Aerospace, and Nuclear Engineering at Rensselaer, who led the project. “The increased boiling efficiency seems to be the result of an interesting interplay between the nanoscale and microscale surfaces of the treated metal. The potential applications for this discovery are vast and exciting, and we’re eager to continue our investigations into this phenomenon.”

Bringing water to a boil, and the related phase change that transforms the liquid into vapor, requires an interface between the water and air. In the example of a pot of water, two such interfaces exist: at the top where the water meets air, and at the bottom where the water meets tiny pockets of air trapped in the microscale texture and imperfections on the surface of the pot. Even though most of the water inside of the pot has reached 100 degrees Celsius and is at boiling temperature, it cannot boil because it is surrounded by other water molecules and there is no interface (i.e., no air) present to facilitate a phase change.

Bubbles are typically formed when air is trapped inside a microscale cavity on the metal surface of a vessel, and vapor pressure forces the bubble to the top of the vessel. As this bubble nucleation takes place, water floods the microscale cavity, which in turn prevents any further nucleation from occurring at that specific site.

Koratkar and his team found that by depositing a layer of copper nanorods on the surface of a copper vessel, the nanoscale pockets of air trapped within the forest of nanorods “feed” nanobubbles into the microscale cavities of the vessel surface and help to prevent them from getting flooded with water. This synergistic coupling effect promotes robust boiling and stable bubble nucleation, with large numbers of tiny, frequently occurring bubbles.

“By themselves, the nanoscale and microscale textures are not able to facilitate good boiling, as the nanoscale pockets are simply too small and the microscale cavities are quickly flooded by water and therefore single-use,” Koratkar said. “But working together, the multiscale effect allows for significantly improved boiling. We observed a 30-fold increase in active bubble nucleation site density (a fancy term for the number of bubbles created) on the surface treated with copper nanotubes, over the nontreated surface.”

Boiling is ultimately a vehicle for heat transfer, in that it moves energy from a heat source to the bottom of a vessel and into the contained liquid, which then boils, and turns into vapor that eventually releases the heat into the atmosphere. This new discovery allows this process to become significantly more efficient, which could translate into considerable efficiency gains and cost savings if incorporated into a wide range of industrial equipment that relies on boiling to create heat or steam.

“If you can boil water using 30 times less energy, that’s 30 times less energy you have to pay for,” he said.

The team’s discovery could also revolutionize the process of cooling computer chips. As the physical size of chips has shrunk significantly over the past two decades, it has become increasingly critical to develop ways to cool hot spots and transfer lingering heat away from the chip. This challenge has grown more prevalent in recent years, and threatens to bottleneck the semiconductor industry’s ability to develop smaller and more powerful chips.

Boiling is a potential heat transfer technique that can be used to cool chips, Koratkar said, so depositing copper nanorods onto the copper interconnects of chips could lead to new innovations in heat transfer and dissipation for semiconductors.

“Since computer interconnects are already made of copper, it should be easy and inexpensive to treat those components with a layer of copper nanorods,” Koratkar said, noting that his group plans to further pursue this possibility.

Source: Rensselaer Polytechnic Institute

The last time I wrote about the researchers at LLNL was back in December 2006. Their carbon nanotube research is the most promising imho of a half dozen interesting lines of research that I’ve seen. That is, the goal of membrane research is to have a pipe that ends in a covered mushroom shape that rises above the ocean floor in 50-100 feet of salt water, someplace where there is a strong coastal current. Fresh water filters through a membrane without extra energy and falls through some kind of gas that’s hostile to aerobic and anaerobic bacteria, like maybe chlorine. This research provides a path to that goal.

In the initial discovery, reported in the May 19, 2006 issue of the journal Science, the LLNL team found that water molecules in a carbon nanotube move fast and do not stick to the nanotube’s super smooth surface, much like water moves through biological channels. The water molecules travel in chains – because they interact with each other strongly via hydrogen bonds.

Of course one of the most promising applications for this process is seawater desalination.

These membranes will some day be able to replace conventional membranes and greatly reduce energy use for desalination.

The current study looked at the process in more detail.

In the recent study, the researchers wanted to find out if the membranes with 1.6 nanometer (nm) pores reject ions that make up common salts. In fact, the pores did reject the ions and the team was able to understand the rejection mechanism.

What was the rejection mechanism?

Fast flow through carbon nanotube pores makes nanotube membranes more permeable than other membranes with the same pore sizes. Yet, just like conventional membranes, nanotube membranes exclude ions and other particles due to a combination of small pore size and pore charge effects.

But it was principally charge that did the deed.

“Our study showed that pores with a diameter of 1.6nm on the average, the salts get rejected due to the charge at the ends of the carbon nanotubes,” said Francesco Fornasiero, an LLNL postdoctoral researcher, team member and the study’s first author.

The salinity of the water studied was much lower than brackish water. So work will need to be done to figure out how to increase the charge at the tip of the nanotubes. Might be good to highly charge the filler material. Or put imperfections in the carbon nanotubes to increase their charge. In this blog I mention that charge might be related to something else. Here’s still another take on charge. Might be good work for simulations. Earlier work last fall showed a nice congruence between experimental work and computer models.

Finally, Siemens recently announced that they had developed a process that would cut energy use in half. Their method involved removing salt using an electric field. So an interesting way to “artificially” introduce a larger charge for higher salt concentrations would be to create a small electric field along the surface of the carbon nanotube. This, of course, costs energy. But it would make an interesting interim step.

As well, it’s helpful to mention that the study just announced by LLNL was not about how water flowed through the membrane; rather, the experiment was designed to more precisely peg the mechanism by which salt rejection took place at the carbon nanotube’s tip. So the animation in the press release is a bit misleading.

Some further study of the process by which water flowed through the nanotubes was done by Jason Holt.

A while back I asked a member of the LLNL team what the best investment of dollars would be for research in this field. He said that the best investment currently would be “in coming up with scalable (economical) processes for producing membranes that use nanotubes or other useful nanomaterials for desalination.”

Here is a link to the LLNL press release.

Water from Gypsum By Steam Injection

14th June 2008

Here’s one interesting idea for getting water to desert regions. Consider gypsum. There’s lots of it in the southwest. The chemical formula for gypsum is CaSO4.2H2O. Notice the H2O on the end? Gypsum is about 20% water by weight. Did you know that you can quickly cook the water out of gypsum at 212F (100C)? Gypsum occurs in flat planes, often not far from the surface, especially in old dry lakebeds. You could cook those planes. Leaving a mineral residue called bassanite, water would percolate up and the earth would subside, causing a lake. Think you could find a heat source in the desert? Maybe flared off gas? Maybe solar power? Maybe a coal plant somewhere. 212 degrees isn’t too hot. Hmm, 212 is a familiar number. You might use steam.
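The 20% figure is easy to check from the formula. Here is a minimal sketch using standard atomic masses. The `waters_released` parameter is my own addition: bassanite is normally described as the hemihydrate (CaSO4.0.5H2O), so if the cooking stops there, only about 1.5 of the 2 waters per formula unit would come out.

```python
# Standard atomic masses in g/mol.
ATOMIC_MASS = {"Ca": 40.078, "S": 32.06, "O": 15.999, "H": 1.008}

M_CASO4 = ATOMIC_MASS["Ca"] + ATOMIC_MASS["S"] + 4 * ATOMIC_MASS["O"]
M_H2O = 2 * ATOMIC_MASS["H"] + ATOMIC_MASS["O"]
M_GYPSUM = M_CASO4 + 2 * M_H2O  # CaSO4.2H2O


def water_fraction(waters_released: float = 2.0) -> float:
    """Mass of water released per unit mass of gypsum.

    waters_released is the number of H2O per formula unit driven off:
    2.0 for complete dehydration, 1.5 if the residue is the hemihydrate.
    """
    return waters_released * M_H2O / M_GYPSUM


full = water_fraction(2.0)
to_hemihydrate = water_fraction(1.5)
print(f"All water out: {full:.1%}, or about {full * 1000:.0f} kg per tonne of gypsum")
print(f"Stopping at hemihydrate: {to_hemihydrate:.1%}")
```

So "20% water by weight" holds up for complete dehydration, with somewhat less recoverable if the residue keeps half a water.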

A Dutch team has already done the initial testing. Holland Innovation Team is planning a pilot study in a desert location. They don’t say where. They don’t say how they’re going to extract the water either. See below.

But before you go. Consider. There’s a group of men parked outside of Heartbreak Hotel. Specifically Shell’s experimental in situ oil shale facility, Piceance Basin, Colorado. They climb up to their beds every night. Every night they toss and turn. In the morning they go out to a set of cool tools they’ve developed to extract oil from oil shale using steam injection. There’s several other processes that involve superheated air and others. See the list here. (As well, for surface gypsum, concentrated solar might be appropriate.) Anyhow, they’re all revved up and ready to go but Congress (specifically a senator from Colorado) is telling them they have to sit on their thumbs and think about it. (For that matter the BLM is holding up a lot of solar development.)

Someone might find these guys and say hey. While you wait. You can use your cool tools on our gypsum. Funding should be easy.

The water from gypsum looks to be relatively expensive. But certainly it would be a fraction of the cost of oil from oil shale, since the oil shale requires 600+ degrees of heat (vs 212F for gypsum) to cook out the oil and the deposits are usually 1000 feet down (vs at or near the surface for gypsum). And there’s no clean up or refining. For some desert valleys water from gypsum would be a fail safe water source.

Anyhow read the article below and consider.

Public release date: 11-Jun-2008

Contact: Peter van der Gaag

pvdgaag@hollandinnovationteam.nl

Inderscience Publishers

Rocky water source

Water from rock, easier than blood from stone

Gypsum, a rocky mineral is abundant in desert regions where fresh water is usually in very short supply but oil and gas fields are common. Writing in International Journal of Global Environmental Issues, Peter van der Gaag of the Holland Innovation Team, in Rotterdam, The Netherlands, has hit on the idea of using the untapped energy from oil and gas flare-off to release the water locked in gypsum.

Fresh water resources are scarce and will be more so with the effects of global climate change. Finding alternative sources of water is an increasingly pressing issue for policy makers the world over. Gypsum, explains van der Gaag could be one such resource. He has discussed the technology with people in the Sahara who agree that the idea could help combat water shortages, improve irrigation, and even make some deserts fertile.

Chemically speaking, gypsum is calcium sulfate dihydrate, and has the chemical formula CaSO4.2H2O. In other words, for every unit of calcium sulfate in the mineral there are two water molecules, which means gypsum is 20% water by weight.

van der Gaag suggests that a large-scale, or macro, engineering project could be used to tap off this water from the vast deposits of gypsum found in desert regions, amounting to billions of cubic meters and representing trillions of liters of clean, drinking water.

The process would require energy, but this could be supplied using the energy from oil and gas fields that is usually wasted through flaring. Indeed, van der Gaag explains that it takes only moderate heating, compared with many chemical reactions, to temperatures of around 100 Celsius to liberate water from gypsum and turn the mineral residue into bassanite, the anhydrous form. “Such temperatures can be reached by small-scale solar power, or alternatively, the heat from flaring oil wells can be used,” he says. He adds that, “Dehydration under certain circumstances starts at 60 Celsius, goes faster at 85 Celsius, and faster still at 100 degrees. So in deserts – where there is abundant sunlight – it is very easy to do.”

van der Gaag points out that the dehydration of gypsum results in a material of much lower volume than the original mineral, so the very process of releasing water from the rock will cause local subsidence, which will then create a readymade reservoir for the water. Tests of the process itself have proved successful and the Holland Innovation Team is planning a pilot study in a desert location.

“The macro-engineering concept of dewatering gypsum deposits could solve the water shortage problem in many dry areas in the future, for drinking purposes as well as for drip irrigation,” concludes van der Gaag.

“Mining water from gypsum” in International Journal of Global Environmental Issues, 2008, 8, 274-281

Public release date: 11-Jun-2008

Contact: Peter van der Gaag

pvdgaag@hollandinnovationteam.nl

Inderscience Publishers

Rustum Roy’s Work With Kanzius Effect

18th April 2008

Rustum Roy, J. Rao and J. Kanzius have jointly published a paper on the Kanzius effect entitled “Observations of polarised RF radiation catalysis of dissociation of H2O–NaCl solutions” in Materials Research Innovations, Volume 12, Number 1, March 2008, pp. 3-6(4). (You’ll have to do a search at the links provided to pull up the pdf.) This work basically confirms the information posted last year here and here. Note how the size of the flame varies with the concentration of NaCl. From the article:

Figure 1 shows a very simple view of the variation of the flame size with the concentration of the solution. At 3% (about sea water concentration) the results presented in the YouTube video are essentially confirmed. Larger flame sizes of about 4–5 inches are noted with higher concentrations of NaCl. Immediately after the RF power is turned ‘ON’, the flammable gas can be ignited.

The flame shuts ‘OFF’ instantly as soon as the RF power is shut off. In the experiments to determine the effect of concentration, the authors were able to show that even 1 wt-% NaCl sustains a small flame continuously. Also used were concentrations close to saturation with NaCl that produce somewhat larger flames as can be seen in Fig. 1. A solid sustainable flame is obtained at all percentages of NaCl >1%.

Rudimentary attempts were made to measure the temperature of the flame – they agree with more detailed measurements made by Dr Curley at M.D. Anderson, which place it at ~1800 °C.

Conclusions: It has been confirmed that polarised RF frequency radiation at 13.56 MHz causes NaCl solutions in water, with concentrations from 1 to over 30%, to be measurably changed in structure, and to dissociate into hydrogen and oxygen near room temperature. The flame is a burning reaction, probably of an intimate mixture of hydrogen, oxygen and the ambient air. Most of the Na present in the solution concentrates progressively – as measured – as the water is dissociated and burned.

No claim has been made that the process nets energy. The thing of interest here, however, is that the flame grows with the concentration of NaCl: the higher the concentration, the larger the flame.

As mentioned on this blog in connection with the forward osmosis work put out by the WaterReuse Foundation, one special use for the Kanzius effect would be to flare off the water from concentrated brine after forward or reverse osmosis, while providing an additional source of power to lower the net energy cost.

Lake Mead II

17th February 2008

Last April the New York Times ran an article on the western drought. However, here’s the first official study I’ve seen of the effects of rising demand and falling supply on Lake Mead. It’s put out by Scripps Institution of Oceanography, UC San Diego. Perhaps reports like this were why Patricia Mulroy, General Manager of the Southern Nevada Water Authority, communicated the urgent need for action over the next 10 years at the MSSC in January. Across the net you could find people who would dispute the idea that there is falling supply. But no one disputed that there is rising demand. And no one suggested that there is rising supply, except for the pop in snowfall in the Sierra Nevadas and the Rockies in January. Desalination and reclamation are mentioned as two promising but expensive alternatives. (See the graph at the bottom for 50-year snowpack trends and discussions of sunspot activity, which include the first observations of cycle 24 in January.)

Lake Mead could be dry by 2021

A map of the Colorado River basin. Credit: Scripps Institution of Oceanography, UC San Diego

There is a 50 percent chance Lake Mead, a key source of water for millions of people in the southwestern United States, will be dry by 2021 if climate changes as expected and future water usage is not curtailed, according to a pair of researchers at Scripps Institution of Oceanography, UC San Diego.

Without Lake Mead and neighboring Lake Powell, the Colorado River system has no buffer to sustain the population of the Southwest through an unusually dry year, or worse, a sustained drought. In such an event, water deliveries would become highly unstable and variable, said research marine physicist Tim Barnett and climate scientist David Pierce.

Barnett and Pierce concluded that human demand, natural forces like evaporation, and human-induced climate change are creating a net deficit of nearly 1 million acre-feet of water per year from the Colorado River system that includes Lake Mead and Lake Powell. This amount of water can supply roughly 8 million people. Their analysis of Federal Bureau of Reclamation records of past water demand and calculations of scheduled water allocations and climate conditions indicate that the system could run dry even if mitigation measures now being proposed are implemented.
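To get a feel for the "1 million acre-feet supplies roughly 8 million people" figure, here is a rough conversion to per-person use. This is only a sketch of the arithmetic implied by the article's two numbers; the function name is made up, and the conversion factors are the usual US ones (about 325,851 gallons per acre-foot).

```python
GALLONS_PER_ACRE_FOOT = 325_851      # US gallons in one acre-foot
LITERS_PER_GALLON = 3.785411784      # liters in one US gallon


def per_capita_use(acre_feet_per_year, people):
    """Return (acre-feet per person per year, gallons per person per day,
    liters per person per day) implied by a supply and a population."""
    acre_feet_each = acre_feet_per_year / people
    gallons_per_day = acre_feet_each * GALLONS_PER_ACRE_FOOT / 365
    liters_per_day = gallons_per_day * LITERS_PER_GALLON
    return acre_feet_each, gallons_per_day, liters_per_day


if __name__ == "__main__":
    # Figures from the article: ~1 million acre-feet/year deficit, ~8 million people.
    af, gpd, lpd = per_capita_use(1_000_000, 8_000_000)
    print(f"{af:.3f} acre-feet per person per year")
    print(f"about {gpd:.0f} gallons ({lpd:.0f} liters) per person per day")
```

That works out to an eighth of an acre-foot per person per year, or a little over 110 gallons a day, which is in the range usually quoted for household use.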

The paper, “When will Lake Mead go dry?,” has been accepted for publication in the peer-reviewed journal Water Resources Research, published by the American Geophysical Union (AGU).

“We were stunned at the magnitude of the problem and how fast it was coming at us,” said Barnett. “Make no mistake, this water problem is not a scientific abstraction, but rather one that will impact each and every one of us that live in the Southwest.”

“It’s likely to mean real changes to how we live and do business in this region,” Pierce added.

The Lake Mead/Lake Powell system includes the stretch of the Colorado River in northern Arizona. Aqueducts carry the water to Las Vegas, Los Angeles, San Diego, and other communities in the Southwest. Currently the system is only at half capacity because of a recent string of dry years, and the team estimates that the system has already entered an era of deficit.

“When expected changes due to global warming are included as well, currently scheduled depletions are simply not sustainable,” wrote Barnett and Pierce in the paper.

Barnett and Pierce note that a number of other studies in recent years have estimated that climate change will lead to reductions in runoff to the Colorado River system. Those analyses consistently forecast reductions of between 10 and 30 percent over the next 30 to 50 years, which could affect the water supply of between 12 and 36 million people.

The researchers estimated that there is a 10 percent chance that Lake Mead could be dry by 2014. They further predict that there is a 50 percent chance that reservoir levels will drop too low to allow hydroelectric power generation by 2017.

The researchers add that even if water agencies follow their current drought contingency plans, it might not be enough to counter natural forces, especially if the region enters a period of sustained drought and/or human-induced climate changes occur as currently predicted.

Barnett said that the researchers chose to go with conservative estimates of the situation in their analysis, though the water shortage is likely to be more dire in reality. The team based its findings on the premise that climate change effects only started in 2007, though most researchers consider human-caused changes in climate to have likely started decades earlier. They also based their river flow on averages over the past 100 years, even though it has dropped in recent decades. Over the past 500 years the average annual flow is even less.

“Today, we are at or beyond the sustainable limit of the Colorado system. The alternative to reasoned solutions to this coming water crisis is a major societal and economic disruption in the desert southwest; something that will affect each of us living in the region,” the report concluded.

Source: University of California – San Diego

This news is brought to you by PhysOrg.com

///////////////

Study: Climate Change Escalating Severe Western Water Crisis

While the above article suggests that climate change is associated with man-made carbon dioxide loading of the atmosphere, NASA suggests that the cause of warming has historically been the sunspot cycle.

Here’s a picture of the sunspot cycle over the last three hundred years. Here’s a NASA picture of sunspot activity from 1995-2015. The first observations of cycle 24, projected in the picture of sunspot activity, were made in January.

The Materials Research Society

08th February 2008

Last November the Materials Research Society held Symposium V: Materials Science of Water Purification. Their website is a good place to go for anyone interested in getting to know the players in cutting-edge desalination-related materials research. Presenters include Jason Holt, Rustum Roy and Erik Hoek.

On Tuesday, March 25, 2008, the LLNL team that developed the carbon nanotube membrane will be on three panels at the Materials Research Society meeting in San Francisco. According to the schedule, Wednesday is dedicated to topics that show the possibility of more commercial applicability. At 9:30 AM that morning the LLNL team will be on a panel with UC Berkeley and UC Davis scientists discussing “Mechanism of Ion Exclusion by Sub-2nm Carbon Nanotube Membranes.” My guess would be that this panel will discuss charge. But maybe not. Here is the 2008 MRS Spring Meeting program in html format. Registration online is available here.

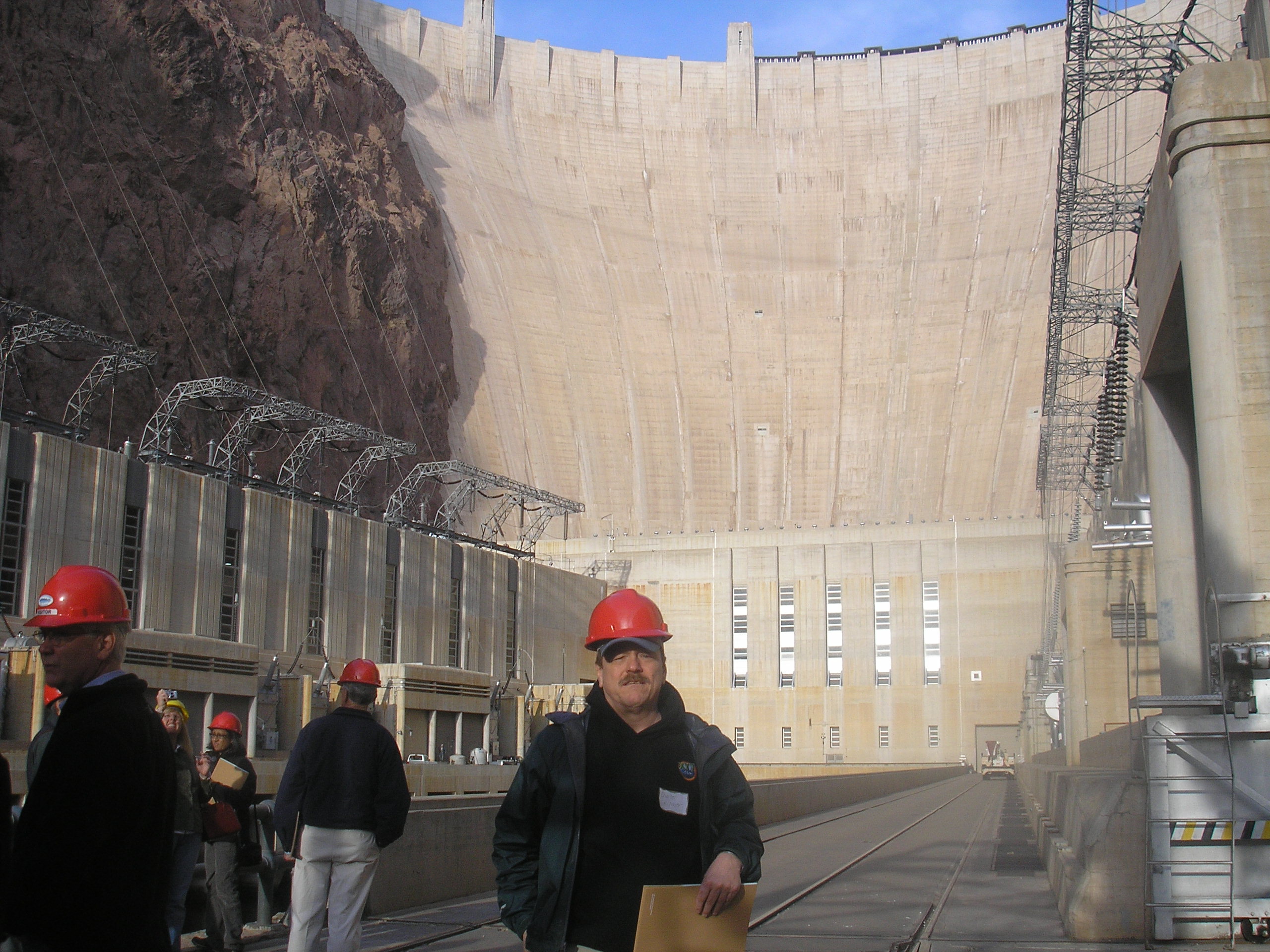

Hoover Dam

25th January 2008

Well, I’ve had a little time to think about the MSSC Desalination Summit in Las Vegas, Jan 16-18. I asked the same kinds of questions at this meeting as I did last August at the annual American Membrane Technology Association conference. The effect was almost the same. Almost, but not quite. Patricia Mulroy, General Manager of the Southern Nevada Water Authority, communicated the urgent need for action over the next 10 years. Also, it seemed a few of the guys at the conference caught a glimmer of what I was getting at.

I also had the impression that the Bureau of Reclamation is moving toward taking a bigger role in water desalination research.

During one of the Q&As I mentioned that the Australians had responded to their drought by appropriating 250 million over 7 years to cut the cost of water desalination in half. What I didn’t mention was that their confidence that they could do so came in part from American research. The announcement that they were going to appropriate 250 million for desalination research came four months after a visit by LLNL scientists to Australia to show how their carbon nanotubes could desalinate water without energy-intensive pumps. Fresh water just passed through their membranes. That story was printed in every provincial Australian newspaper. In the USA that story never made it out of the science journals.

Pat Mulroy mentioned the relationship between energy and water. Everyone in desalination knows about this but nobody else does. It would be very helpful if Nevada people especially could be buttonholed to finance three sets of commercials for the Washington DC TV market. The first would make the link between water and energy. The second would make the link between climate change and water supplies in the west, the southeast and even the northeast. As mentioned at the conference, even New York City has begun to think about the effects of sea water intrusion into its pristine water supply. The point is that climate change and population growth are not a regional problem. Finally, a third commercial should emphasize that the water solutions of the New Deal are no longer adequate for the growing populations and climate change that characterize the 21st century. The future is not what it used to be. These commercials would run for a year.

As mentioned in the Thursday morning Congressional Video Link Up, Washington staffers and congressmen know precious little about the desalination business. Therefore they don’t understand the link between energy research, for which there is a great deal of money available, and water desalination research, for which there is precious little. Some commercials establishing the link would make selling it easier, and thereby ease the task of obtaining R&D funding.

One reason it’s important to make this link is that the likelihood of multibillion-dollar increases in energy-related R&D is increasing dramatically. Hillary has stressed the need for a significant increase in green research without being too specific. Sen. Barack Obama has called for “serious leadership to get us started down the path of energy independence.” All the Republican candidates have stressed the need for energy independence. Mayor Giuliani said

that weaning the United States off foreign oil must become a national purpose, and that doing it within 10 to 15 years would be a centerpiece of a Giuliani presidency. “The federal government must treat energy independence as a matter of national security,” he said, comparing it to the effort in the 1950s and ’60s to put men on the moon.

Sen. John McCain has declared, “We need energy independence.”

He promised to make the U.S. oil independent within five years. The Senator says he’ll make it happen quickly, with a program like the Manhattan Project. That was the big push the U.S. made to build an atomic bomb before Germany could get one.

Notice the references to the Manhattan Project and the Moon Shot.

In the last couple of weeks, Mitt Romney has put up a dollar number for increasing energy R&D. Romney

advocates increasing federal investments in energy, materials science, automotive technology and fuel technology from $4 billion a year — its current level — to $20 billion a year.

Why the references to wartime projects like the Moon Shot and the Manhattan Project? And why have the time frames been shortened to 5-10 years? It’s not just environmental or national security concerns. Now even big oil is buying into the peak oil argument. Shell Oil CEO Jeroen van der Veer this week wrote, “Shell estimates that after 2015 supplies of easy-to-access oil and gas will no longer keep up with demand.” That means that unless crash programs are enacted to bring down demand for oil, especially in the USA, oil prices are going to the moon. One way or the other a radical rewrite of the energy picture is coming.

The picture of Hoover Dam tells pretty much the same story for water–and in the same time frame. Supplies are not keeping up with demand.

Mike Hightower of Sandia Labs mentioned on Thursday that alternative energy over the next couple years would become more economical than traditional energy sources. He said something similar happened to desalinized water 10 years ago.

After the American Membrane Technology Association meeting last August I proposed spending 3 billion over 7-10 years to research ways to collapse the cost of water desalination and transport so that desert water costs nearly the same as east coast water… And the east and gulf coasts would have a new source of cheap fresh water. In the context of current presidential campaign promises, my numbers now don’t seem so extravagant.

It’s remarkable how water and energy production go hand in hand across several fields. The Hoover Dam produces both power and water. Waste heat from power plants on the coast will be used for desalination.

The same is true for research.

IMHO the primary targets for desalination research, catalysts and semipermeable membranes, are the same as those for hydrogen production. It may well be that both will see a need for smart pipelines.

These are things to consider as the water levels fall behind the Hoover Dam. With water levels down, officials are also considering the possibility that the water will get so low that the electrical generators may have to be shut down.

Looks like there will be a good snow pack this year in the Sierra Nevadas and the Rockies. If all goes well that will add one foot to lake levels. That’s a good year. But not so much when you consider that the lake is down 120 feet. At the conference we learned that current climate models in the southwest call for three in ten years as being good for precipitation. It used to be seven in ten years.
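The numbers in the last paragraph can be strung together into a very crude refill estimate. This is only a toy calculation under loud assumptions: every good year adds the same one foot, the other years neither add nor subtract, and demand stays flat. None of that is a forecast, and the function name is made up for illustration.

```python
def years_to_refill(deficit_ft, gain_per_good_year_ft, good_years_per_decade):
    """Calendar years needed to make up a lake-level deficit, assuming only
    good years add water and all other years leave the level unchanged."""
    good_years_needed = deficit_ft / gain_per_good_year_ft
    return good_years_needed * 10 / good_years_per_decade


if __name__ == "__main__":
    # Figures from the post: lake down 120 feet, a good year adds about 1 foot.
    print(f"3 good years in 10: {years_to_refill(120, 1, 3):.0f} years")
    print(f"7 good years in 10: {years_to_refill(120, 1, 7):.0f} years")
```

Even under the old seven-in-ten pattern the toy number is well over a century, which is the point: snowpack alone does not close a 120 foot gap.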